Last episode of this little Scrum story, a variation on a well-known theme.

Our iteration is progressing, and we reach the Friday of the second week. In the afternoon comes the review, which we also call the demo. But we don’t give a demo, we review the completed work, which is different, so I prefer “review”. No, sorry: not the completed work, the done work. Done means tested, validated, compliant with the definition of done, and accepted by the product owner.

At 2pm, for say 45 minutes, we hold the review (which we can prepare a little beforehand, at the end of the morning, if we are good students rather than scoundrels). During it we show the done work, and only that. There is no question of showing something in progress: what is in progress may never be done, or may end up done differently. To get good debates, good trade-offs, real visibility and real feedback (funny word, feedback: French has no really suitable term for it, which is symptomatic; I like its link with learning), we show what we have and NOT what we DON’T have. No middle ground. We are always taken for hippie cowboys, but on this point we are binary. Reading the state of the project/product becomes much easier. And since done items can potentially be put into production, we know exactly what we have and what we don’t. No artistic blur about a feature being 80% finished: that doesn’t mean anything.

So what happens during the 30 to 45 minutes of the review? Say there is a 5-minute opening: “here’s the state of things, here’s what we planned for this iteration, here’s the state of our platforms and our automated tests. Of course, you can connect to the continuous integration platform at any time to play with our product.” Then we go through the done items. Ideally the product owner presents them (at least during the first iterations, so that he really feels he belongs to the team, and so that when he validates something he already thinks about the review…). So the review, especially at the beginning, is led by the product owner (later someone else can take over, once the habit is established). Naturally, no slides: we want done things, so we show done things, on something real. If we can’t show it, we haven’t done it. Be inventive.

If the definition of done says “high availability”, count on me to unplug the server live in front of everyone; the review should simply carry on from another server, since we claim high availability. Or if the definition of done says “mobile apps and website”, count on me to stop the review on the website and ask to see it on the mobile app. I know these scenarios are hard on the team, but it’s far better to be caught out during a review than in production. We feel much more zen when we’ve pushed the quality level all the way, and we gain a lot of speed when that quality provides a solid foundation.

So for about 20 minutes the product owner goes through the done items (which he validated as they came in during the iteration) and asks for feedback. The review is the place for feedback. Let people speak now or forever hold their peace (for better and for worse…). They can’t, or at least shouldn’t, say they didn’t know. The last 5 to 10 minutes of the review open onto what comes next: we present a release plan (updated every iteration) that projects the expected delivery onto a calendar. We’ve observed a cadence, we replay it, and that tells us where we should be on a given date. It works relatively well: there is no estimation, only the replay of an observed cadence, and the reading is clear: what we have, we have, and what we don’t have, we don’t have. And since every item is sliced to be meaningful fairly independently, reading the state of the project/product is effective. I’d add that there shouldn’t be any bugs, and that we apply a definition of done that covers the key concerns of the project/product (load? performance? etc.), so there are no surprises.
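To make the idea of “replaying an observed cadence” in the release plan concrete, here is a minimal Python sketch. It assumes the team simply counts done items per iteration (no story-point estimation), averages that count, and projects the remaining backlog onto two-week iterations. The function name `project_release`, its parameters and the sample numbers are hypothetical illustrations, not a prescribed tool.

```python
from datetime import date, timedelta
from math import ceil


def project_release(done_per_iteration, remaining_items, next_start, iteration_days=14):
    """Replay the observed cadence to project when the remaining items will be done.

    done_per_iteration: count of *done* items in each past iteration (hypothetical data)
    remaining_items:    items still in the backlog for this release
    next_start:         date the next iteration starts
    Returns (iterations_needed, projected_date), where projected_date is the day the
    iteration following the last needed one would start.
    """
    if not done_per_iteration:
        raise ValueError("need at least one completed iteration to observe a cadence")
    # Observed cadence: average number of done items per iteration.
    cadence = sum(done_per_iteration) / len(done_per_iteration)
    if cadence <= 0:
        raise ValueError("no done items observed yet; cannot project")
    # A partly used iteration still counts as a whole iteration.
    iterations_needed = ceil(remaining_items / cadence)
    projected_date = next_start + timedelta(days=iterations_needed * iteration_days)
    return iterations_needed, projected_date


# Hypothetical history: 6, 8 and 7 items done in the last three iterations.
print(project_release([6, 8, 7], remaining_items=30, next_start=date(2024, 3, 4)))
# → (5, datetime.date(2024, 5, 13))
```

Because the plan is recomputed at every review, each new iteration’s count simply joins the history and the projection moves accordingly. A real release plan often shows a range (for example, using the slowest and fastest observed iterations) rather than a single date.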

And what if we do discover a bug during the review? It happens, rarely in principle, but it happens. It goes to the top of the backlog (the expression of need), and we treat it as priority 1. At least that’s my philosophy; the product owner can decide otherwise as long as he takes responsibility for it.

Another point: the review is the opportunity for everyone concerned, especially sponsors, to stay informed. Half an hour every two weeks. If, despite everything, no one comes, propose stopping the project/product. A dialogue along these lines with the sponsor will follow: “We’ve stopped the project.” “What? Why?” “No one comes to the reviews, so it isn’t important; we might as well focus on things that are.” “But it is very important!” “Wait, I don’t understand: either it’s very important and people come for half an hour every two weeks, or it isn’t and we might as well stop it.” Of course, this dialogue is easier to carry off for an outsider like me, but believe me, it works.

45 minutes, 30 minutes: the 45 minutes are a timebox. All Scrum rituals are timeboxed (the iteration itself, the sprint planning, etc.): we can finish early, but we never overrun. We learn to work optimally within this frame, this box. And each box takes place at the same time, with the same objective, the same inputs and the same outputs. Hence the idea of rituals. No need to sacrifice goats.

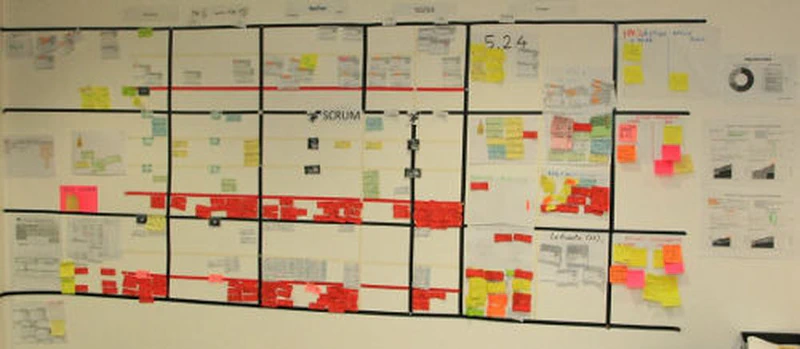

Here is a sort of Scrum/Kanban wall that looks messy but worked quite well.

A short break after the review, then it’s the retrospective’s turn.

The retrospective is a sanctuary reserved for the team: the developers (in the sense of creators) of the product (in its entirety, not just the code), the product owner and the Scrum Master. To improve, we need to speak frankly within the team, to know that the discussions will stay between us, and that no outsider or manager is present. That said, if the team asks for it, anything is possible.

The retrospective is therefore the place for continuous improvement, and for that we must question ourselves. For a two-week iteration, the retrospective generally lasts 2 hours. The first 20 minutes are spent remembering what happened over the past two weeks (with a timeline of facts, for example). The next hour is for questioning ourselves and bringing out questions, successes, failures, frustrations, pleasures, etc. We take 5 to 10 minutes to choose what challenges us most: either a pain point we want to resolve, or a positive point we want to spread. Then we spend the last half hour working out how to resolve or spread it in a factual, measurable, realistic way over the next two weeks. The pitfalls of the retrospective:

- Wanting to change the world in two weeks by launching five improvements over the next ten days: unrealistic and frustrating. Better to focus on a single point.

- Never changing the format: the same conversations and points of view keep coming back, which is irritating and frustrating. It’s the Scrum Master’s job to use different formats that regularly make people see things from new angles.

- Focusing too much on the negative aspects to improve: focusing on positive points and spreading them is just as effective, if not more so!

- Setting a complicated action plan that cannot be measured or depends on external people, instead of a simple, realistic plan the team can carry itself: frustrating and useless.

There you go. On Monday it starts again: the first part of sprint planning (2h30), where we commit to a set of items to deliver; in the afternoon the team breaks them down into tasks and we set up the burndown and burnup charts, which we track throughout the iteration. Every day we have our daily stand-up, the product owner validates user stories as they come in, and one or two refinement meetings (backlog grooming) let us prepare the next sprint planning. Our body of automated tests keeps growing. On the second Friday, review and retrospective. Then on Monday, sprint planning, and off we go again. Well-applied Scrum gives a strong impression of cadence and rigor, almost like Taylorism. Except that the teams are masters of their own destiny, and that changes everything. I like to joke that if you unplug Scrum teams you get headless chickens (“Where’s my daily? What are we working on for the next two weeks?”), to drive home the idea of cadence. Like it or not, we need this cadence, these release cycles. Don’t be surprised if, every six months or so, your Scrum team needs rest, like a sports team. Let it rest. Never forget sustainable pace. And remember that we don’t go faster by going faster, but by going differently.

This rigor comes naturally because Scrum’s toolkit is compact: it fits in your head.

What about when several teams coexist? I suggest not synchronizing them on the calendar. That’s my experience, and I haven’t seen the opposite work as well. Why? Above all else, I prioritize the presence of sponsors and end users at reviews: learning, feedback. These people can’t follow 10 teams, or sit through 10 times 45 minutes on the same day. Too heavy, indigestible. To those who argue that a single 2-hour session for all teams is enough: I find it shows a lack of respect for the teams and undermines their empowerment (again, my experience, my point of view). So I lean toward staggering the reviews across alternating weeks and days, so that every review has a real existence, with real sponsors, end clients (potentially), and real feedback/learning.

Coordinating several teams? We generally use consolidated release plans and/or a portfolio Kanban.

Organizing a company around a Scrum product or project? Some holacracy? Your own flavor of liberated company? Your choice. But don’t forget the basics of this complex world.

An agile transformation?

- What is a successful agile transformation?

- Path of an agile transformation

- Paradoxes of agile transformations

Retrospectives?

The three episodes of the series and the survival guide:

- Once upon a time scrum #1

- Once upon a time scrum #2

- Once upon a time scrum #3

- Agility survival guide (glossary, short descriptions of rituals, bibliography, etc.).

To finish: Scrum, we don’t care. Kanban, we don’t care. XP, we don’t care (though, unfortunately, everyone already says that about XP). Above all, think about the foundations: the intention and attitude of those who hold power in the company, and the physical means of your teams and products (co-location or a genuine virtual space, type of product, market, deployment, etc.). After that, Scrum, Kanban and XP are mature, finely honed toolkits that are well worth using to make a difference.